Using AI & Neural Networks in Motion Graphics

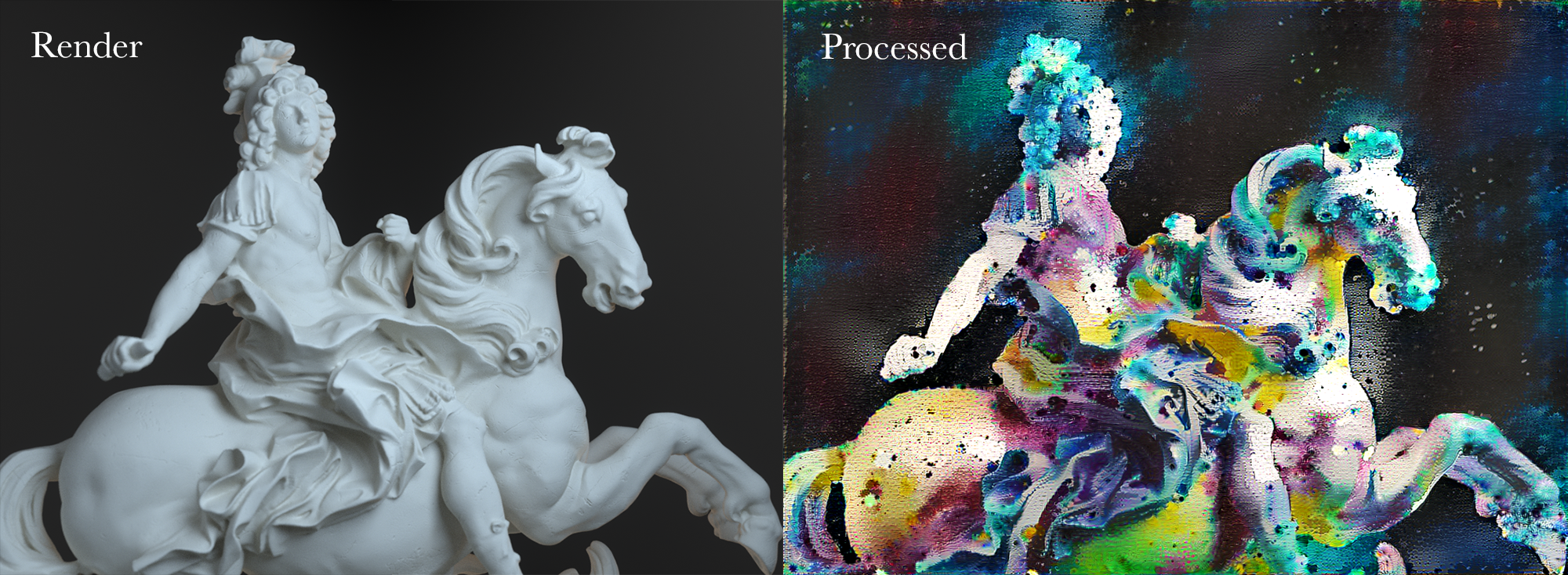

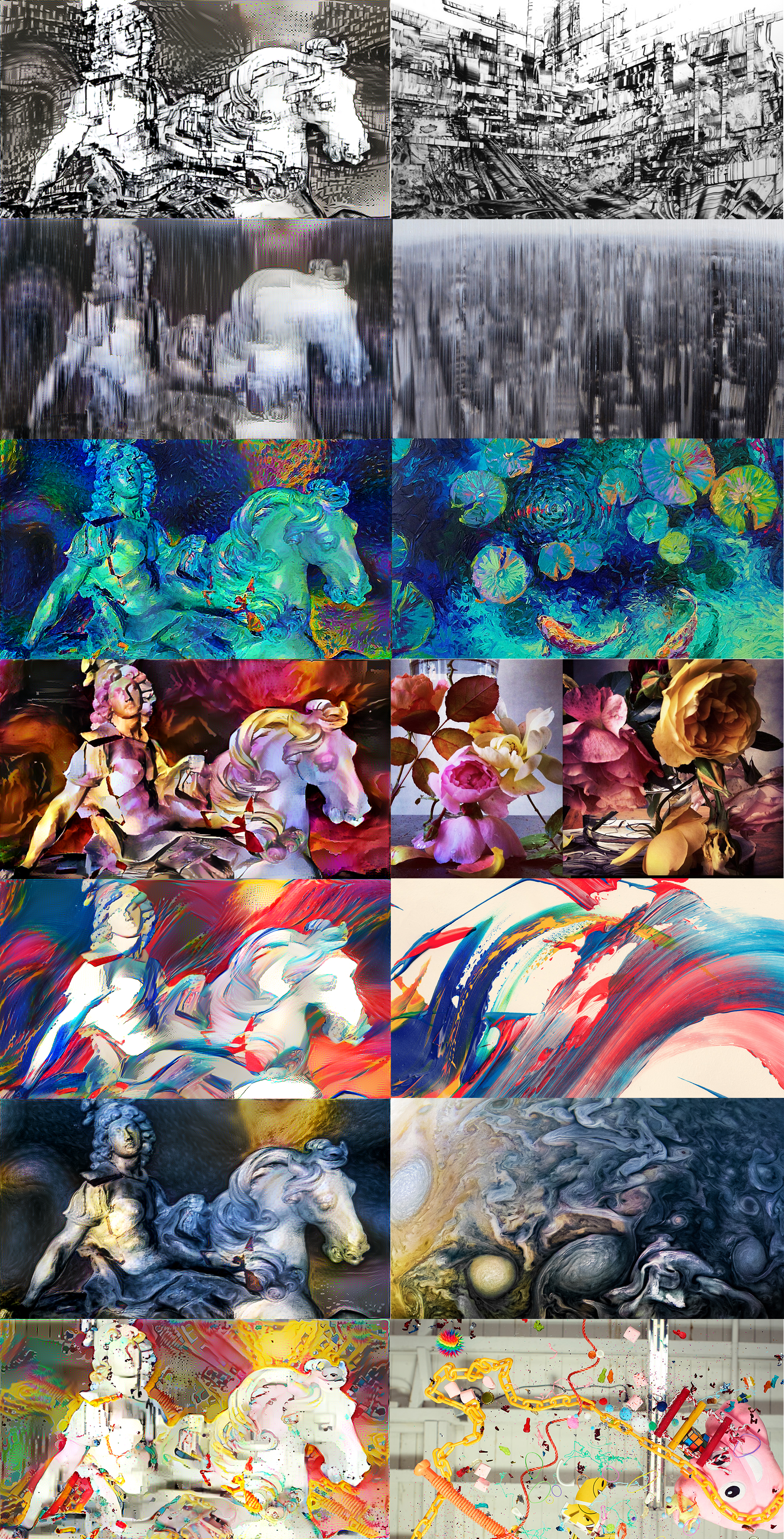

Using a process called style transfer, a neural network based AI can analyze an image or artwork and attempt to transfer the style of that art onto your input image. I first noticed this in 2018, when I saw people online making random images look like Van Gogh’s Starry Night. Some of them looked bad and didn’t seem to work very well, but others were uncanny, and I was compelled to find out how it was done. I spent a long year or two of nights and weekends learning about this process. I found ways it could be used in practical motion graphics applications and worked into traditional workflows to add something really striking to projects. Around this time I accepted an offer to be creative director at a company I really love working for, and this was put on pause.

What I’m posting here was not meant to be public. It was meant to be a reference library for me to see which images worked well and which didn’t, along with some early motion tests I did. But it’s just been sitting unused on a hard drive for almost two years now, so I thought it would be fun to share. I still have plans, and some projects in the works, that make really interesting use of this process if I ever get the time to return to it.